Immersive Ink - Transforming Reading Into an Interactive 3D Storytelling Experience

Product Strategy & Design

Creative Directing

Marketing

Video & Animation

UI/UX

3D Modeling & Prototyping

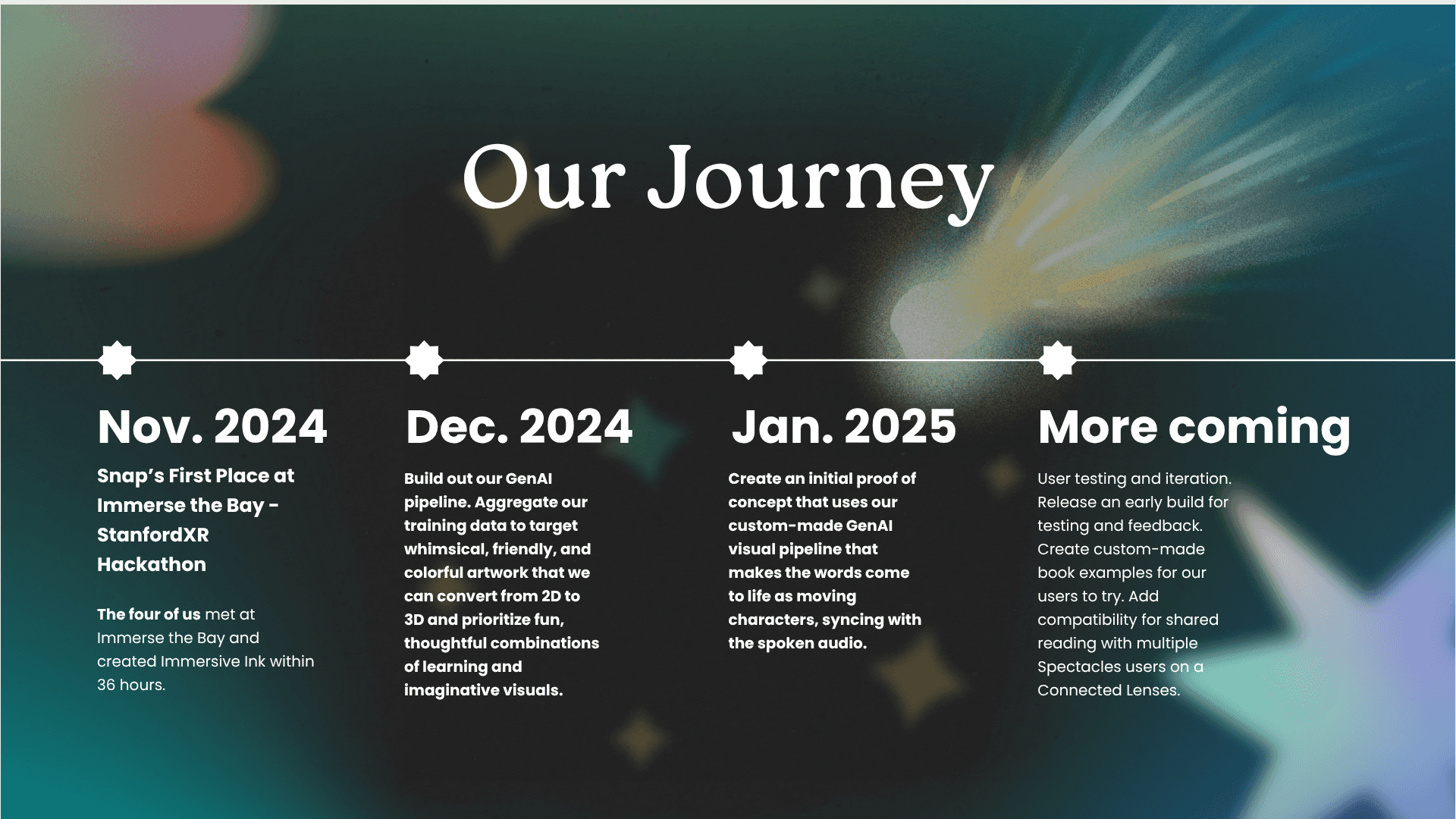

🎖️ SnapAR 1st Place at the Stanford Reality (XR) Hack - Immerse the Bay 2024

Overview

Immersive Ink is an AI + XR storytelling experience that turns ordinary text into vivid, interactive 3D worlds in real time.

Built for Snap Spectacles at a Snap AR hackathon, the project explores how generative AI and spatial computing can make learning and reading more engaging, playful, and emotionally resonant.

With a simple gesture, readers can capture a line of text, watch it transform into a 3D character or object, and hear the story come to life around them.

Role (36 Hours)

Product Designer · AR Interaction Designer · Creative Technologist

I led the user experience and interaction flow, designing how users capture, visualize, and interact with AI-generated 3D elements inside Spectacles. I worked closely with developers to connect the LLM, OCR, and text-to-3D pipelines in real time using Lens Studio and the Meshy API.

Tools

Snap Lens Studio · GPT LLM · Meshy API · Web OCR · Unity · Figma

🔥 Problem

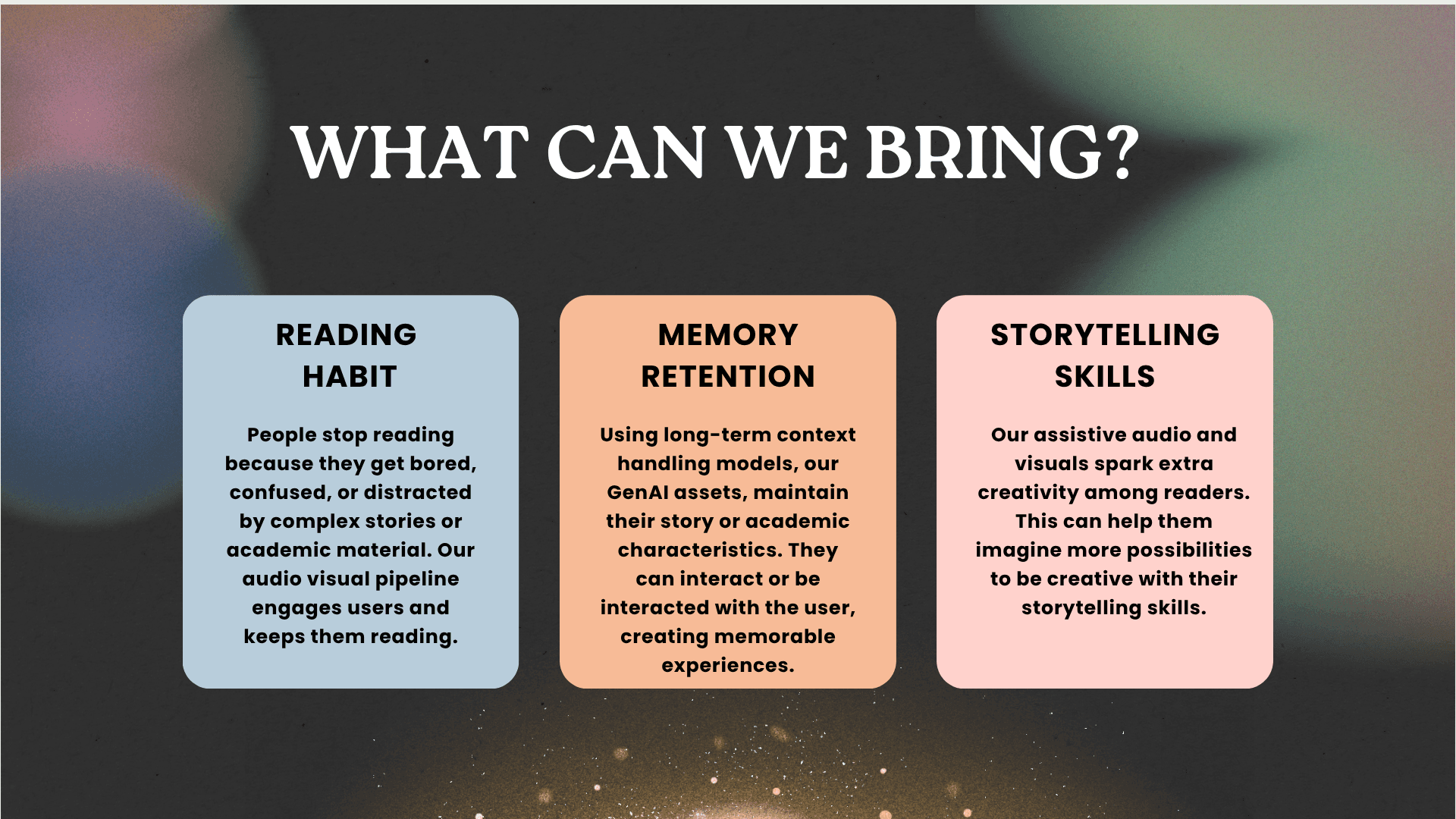

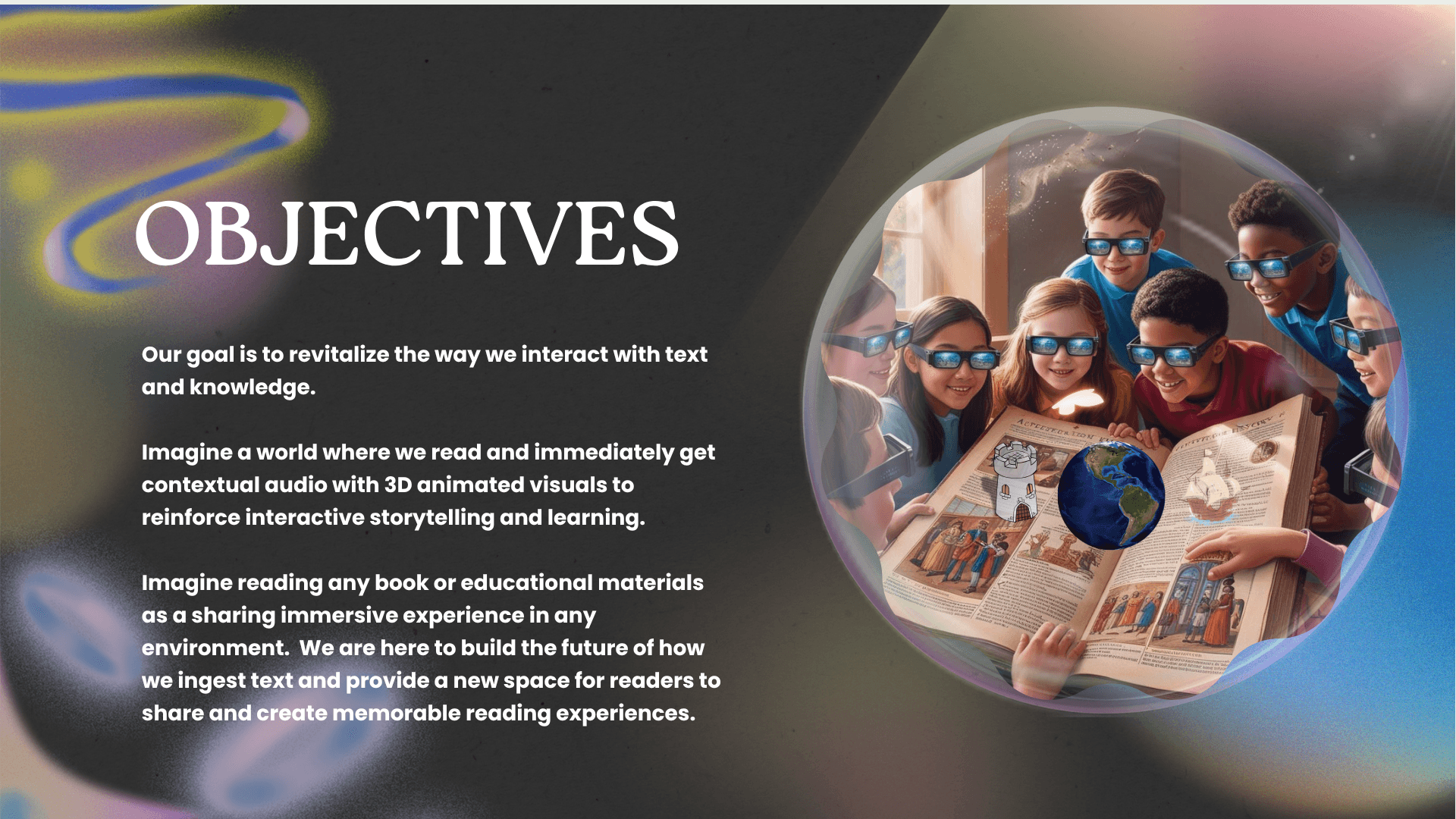

Reading is one of humanity’s oldest ways to learn — but traditional formats can struggle to hold attention in a digital world. Many learners, especially younger audiences, crave visual and immersive experiences that bring abstract ideas to life.

How might we use AI and XR to transform static words into living stories — making reading more interactive, emotional, and fun?

Process

1. Inspiration

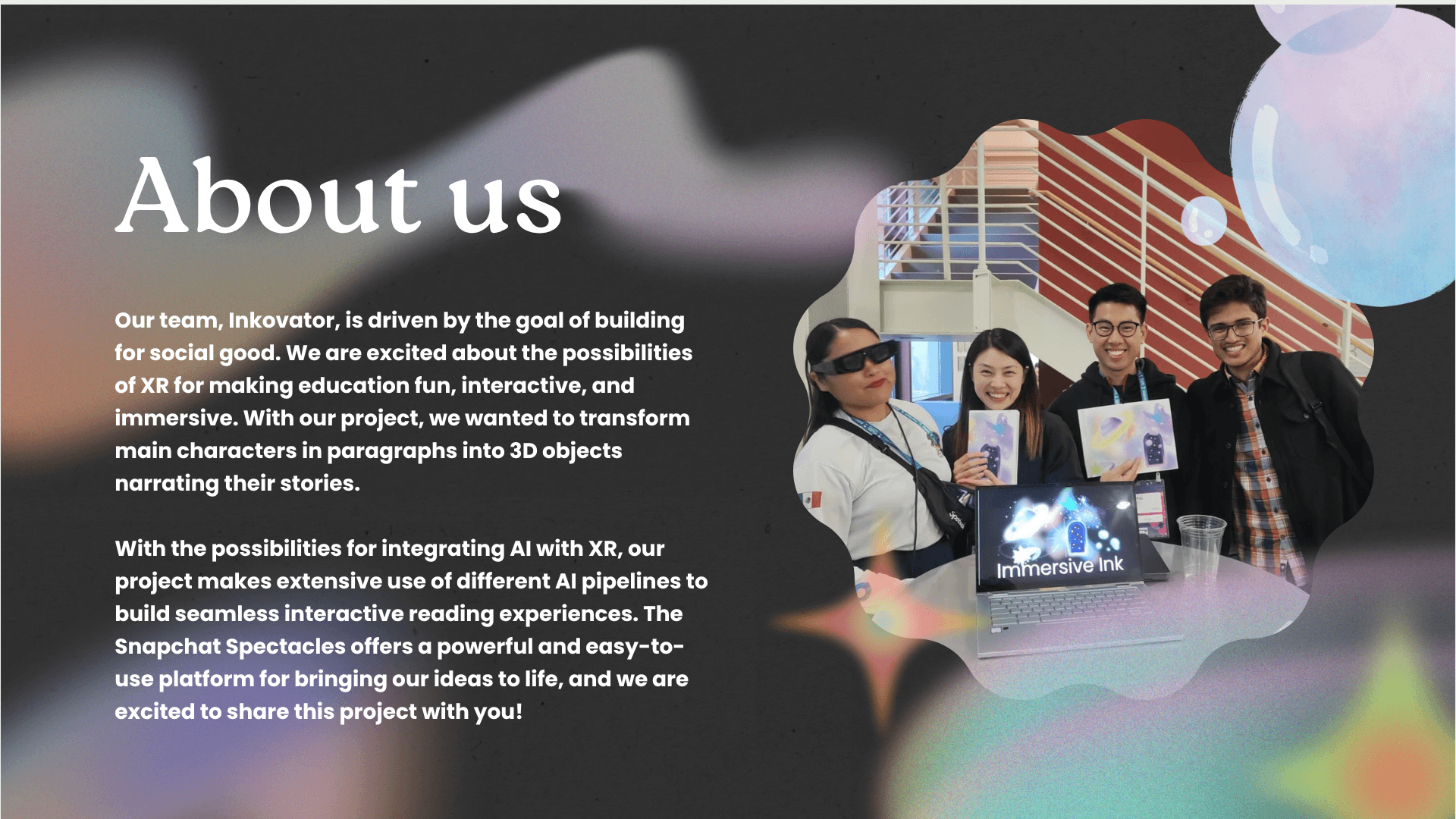

Our team was driven by the goal of building for social good — using XR to make education immersive, creative, and accessible. We wanted to turn dull text into living stories, letting words literally “jump off the page.”

Snap Spectacles provided the perfect hardware platform to explore the next generation of interactive storytelling.

2. Experience Design

When users wear Spectacles and launch Immersive Ink:

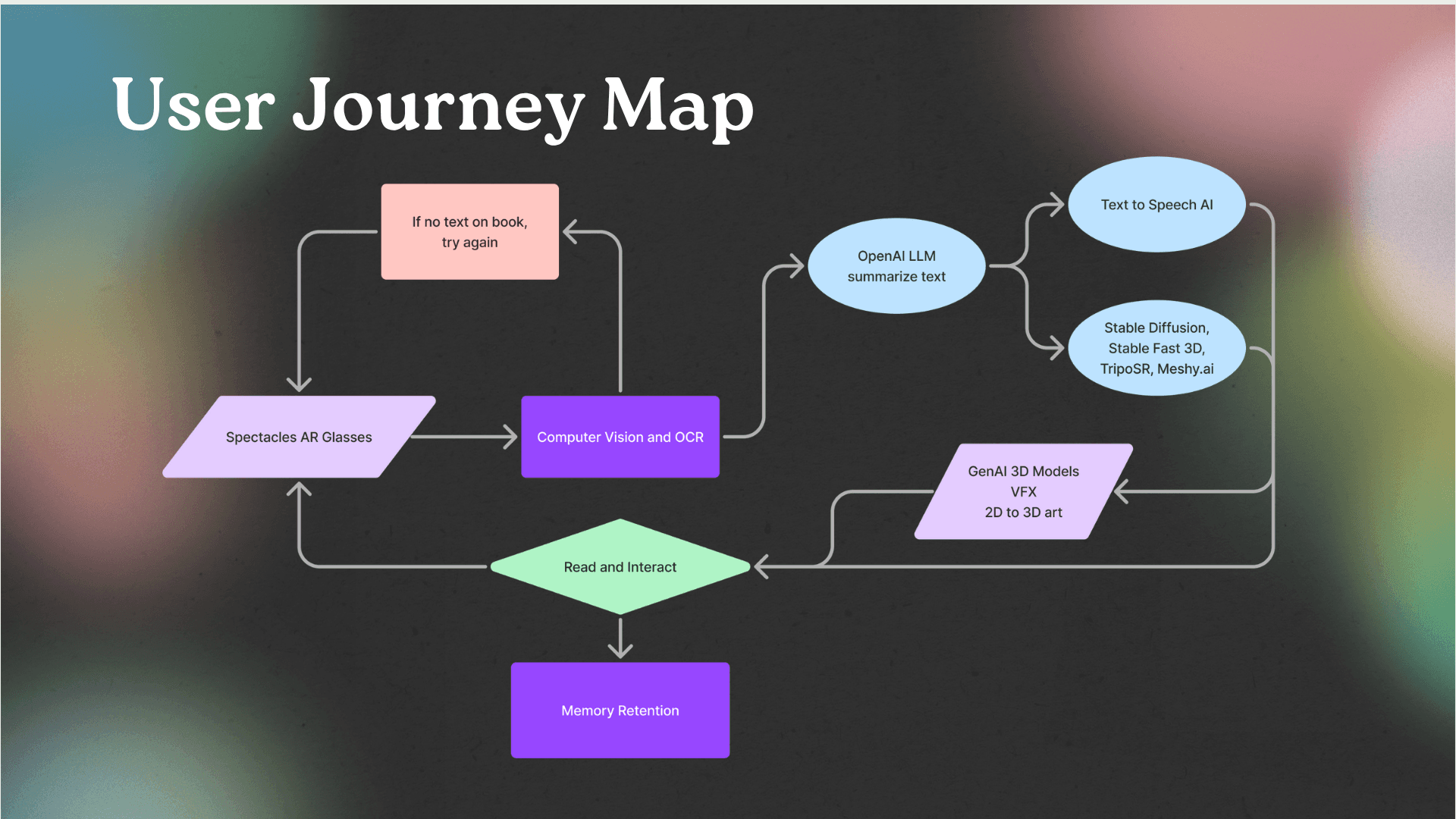

OCR Scanning: The app detects text in real time through the Spectacles’ camera feed.

Gesture Capture: A pinch gesture triggers capture and runs OCR to extract the text.

AI Processing:

The captured text is passed to a GPT LLM, which extracts the key noun or character.

The noun is then sent to Meshy’s Text-to-3D API to generate a model.

3D Visualization: Within 1–2 minutes, the object appears in front of the user as a 3D hologram.

Narration: AI-generated speech reads the text aloud, creating a mixed-reality storytelling moment where the story’s character “tells” its own tale.

Users can capture more text fragments to spawn additional 3D story elements — effectively building an immersive, evolving world as they read.
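The flow above can be sketched as a chain of async stages. This is a minimal illustration rather than the Lens's actual code: the stage names (`readText`, `extractSubject`, `generateModel`, `narrate`) and the `StoryElement` shape are hypothetical, and running narration only after the model is ready is an assumption based on the order in which the steps are listed.

```typescript
// Hypothetical stage signatures; in the Lens each would wrap a real service.
interface StoryStages {
  readText: () => Promise<string>;                     // OCR on the captured frame
  extractSubject: (text: string) => Promise<string>;   // LLM: key noun or character
  generateModel: (subject: string) => Promise<string>; // text-to-3D: returns a model URL
  narrate: (text: string) => Promise<void>;            // text-to-speech playback
}

interface StoryElement {
  text: string;
  subject: string;
  modelUrl: string;
}

// Runs one pinch capture through the stages in the order described above.
// Returns null when OCR finds no usable text, so nothing is spawned.
async function captureToElement(stages: StoryStages): Promise<StoryElement | null> {
  const text = (await stages.readText()).trim();
  if (text.length === 0) return null;

  const subject = await stages.extractSubject(text);
  const modelUrl = await stages.generateModel(subject);
  // Assumption: narration starts once the model exists, so the object "speaks".
  await stages.narrate(text);
  return { text, subject, modelUrl };
}

// The evolving world is modeled as the list of elements captured so far.
async function addCapture(
  world: StoryElement[],
  stages: StoryStages,
): Promise<StoryElement[]> {
  const element = await captureToElement(stages);
  return element === null ? world : [...world, element];
}
```

Passing the stages in as functions keeps the sketch independent of any particular OCR, LLM, or speech service, and makes the flow easy to exercise with stubs.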

3. Technical Architecture

Lens Studio OCR continuously detects text and allows real-time capture through a mirrored preview window.

GPT LLM Integration ensures meaningful object extraction even with imperfect OCR input.

Meshy Text-to-3D Pipeline handles glTF model generation and returns meshes via async API calls.

Async UX Animations keep users engaged during generation delays, visualizing “hologram loading” before final textured models appear.
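One way to get a usable object name out of noisy OCR text is to pair a strict prompt with a defensive cleanup of the model's reply. The sketch below is an assumption about how that could look, not the team's actual prompt: the wording, the role/content message shape, and the helper names `buildExtractionMessages` and `cleanSubject` are all illustrative.

```typescript
// Generic chat-style message; the exact request format depends on the LLM API used.
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Builds a prompt that tolerates OCR noise and asks for a single subject only.
function buildExtractionMessages(ocrText: string): ChatMessage[] {
  return [
    {
      role: "system",
      content:
        "You receive one line of text captured by OCR. It may contain typos or " +
        "stray characters. Reply with only the single most important concrete " +
        "noun or character in the line, in lowercase, with no punctuation or explanation.",
    },
    { role: "user", content: ocrText.trim() },
  ];
}

// Normalizes the reply and rejects anything that does not look like a short noun phrase.
function cleanSubject(reply: string): string | null {
  const firstLine = reply.split("\n")[0] ?? "";
  const cleaned = firstLine
    .toLowerCase()
    .replace(/[^a-z\s-]/g, " ") // drop punctuation, digits, and quotes
    .replace(/\s+/g, " ")
    .trim();
  if (cleaned.length === 0) return null;
  // More than three words suggests the model explained itself instead of answering.
  return cleaned.split(" ").length > 3 ? null : cleaned;
}
```

When `cleanSubject` returns null, the Lens could retry the request or ask the reader to capture the line again instead of sending an unusable string to the text-to-3D step.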

4. Challenges

OCR accuracy: Captured text was often misaligned with the user’s view; we solved this by adding a live camera widget and UI tracking.

LLM robustness: The prompt needed careful tuning so the model returned only the key noun.

Latency: Text-to-3D generation took up to two minutes, requiring smart UX pacing and async polling to keep the app responsive.
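The async polling mentioned above can be sketched as a loop that checks job status at growing intervals and reports progress to the loading animation. This is a hedged illustration: the `JobSnapshot` shape, the status names, the 2 to 10 second backoff, and the three-minute timeout are assumptions, not values from the Meshy API or the project. The `check` and `sleep` functions are injected so the sketch does not assume a particular HTTP client or timer API.

```typescript
// Hypothetical job states; a real text-to-3D API will define its own.
type JobStatus = "pending" | "succeeded" | "failed";

interface JobSnapshot {
  status: JobStatus;
  progress: number; // 0-100, drives the "hologram loading" animation
  modelUrl?: string;
}

// Delay before the next status check: starts at 2 s, grows by 1.5x, capped at 10 s.
function nextDelayMs(attempt: number): number {
  return Math.min(2000 * Math.pow(1.5, attempt), 10000);
}

// Polls until the job succeeds, fails, or the total wait exceeds timeoutMs.
async function pollUntilDone(
  check: () => Promise<JobSnapshot>,
  onProgress: (progress: number) => void,
  sleep: (ms: number) => Promise<void>,
  timeoutMs: number = 180000,
): Promise<string> {
  let waitedMs = 0;
  for (let attempt = 0; waitedMs <= timeoutMs; attempt++) {
    const snapshot = await check();
    onProgress(snapshot.progress);
    if (snapshot.status === "succeeded" && snapshot.modelUrl !== undefined) {
      return snapshot.modelUrl;
    }
    if (snapshot.status === "failed") {
      throw new Error("3D generation failed");
    }
    const delay = nextDelayMs(attempt);
    await sleep(delay);
    waitedMs += delay;
  }
  throw new Error("3D generation timed out");
}
```

Because the loop only awaits between checks, rendering and gesture input stay responsive while the model is generated, and `onProgress` gives the placeholder animation something to show.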

Conclusion

Immersive Ink bridged GPT, OCR, and 3D generation pipelines into one seamless XR experience within a 36-hour hackathon, an integration that is hard to pull off even in longer development cycles.

It showcased how AI and XR can merge storytelling, education, and creativity, earning strong recognition from the Snap AR team for both technical depth and emotional impact.

What’s Next

The team envisions expanding Immersive Ink into an educational platform where:

AI-generated characters animate and act out the story.

Character-specific voices narrate each tale.

Real-time 3D mesh generation creates instant immersion.